Create Trustworthy AI

The world’s first comprehensive evaluation and optimization platform, built to help enterprises achieve 99% accuracy in AI applications across software and hardware.

Faster AI Evaluation

Faster Agent Optimization

Model and Agent Accuracy in Production

LLMs are probabilistic.

Build, Evaluate and Improve AI reliably with Future AGI.

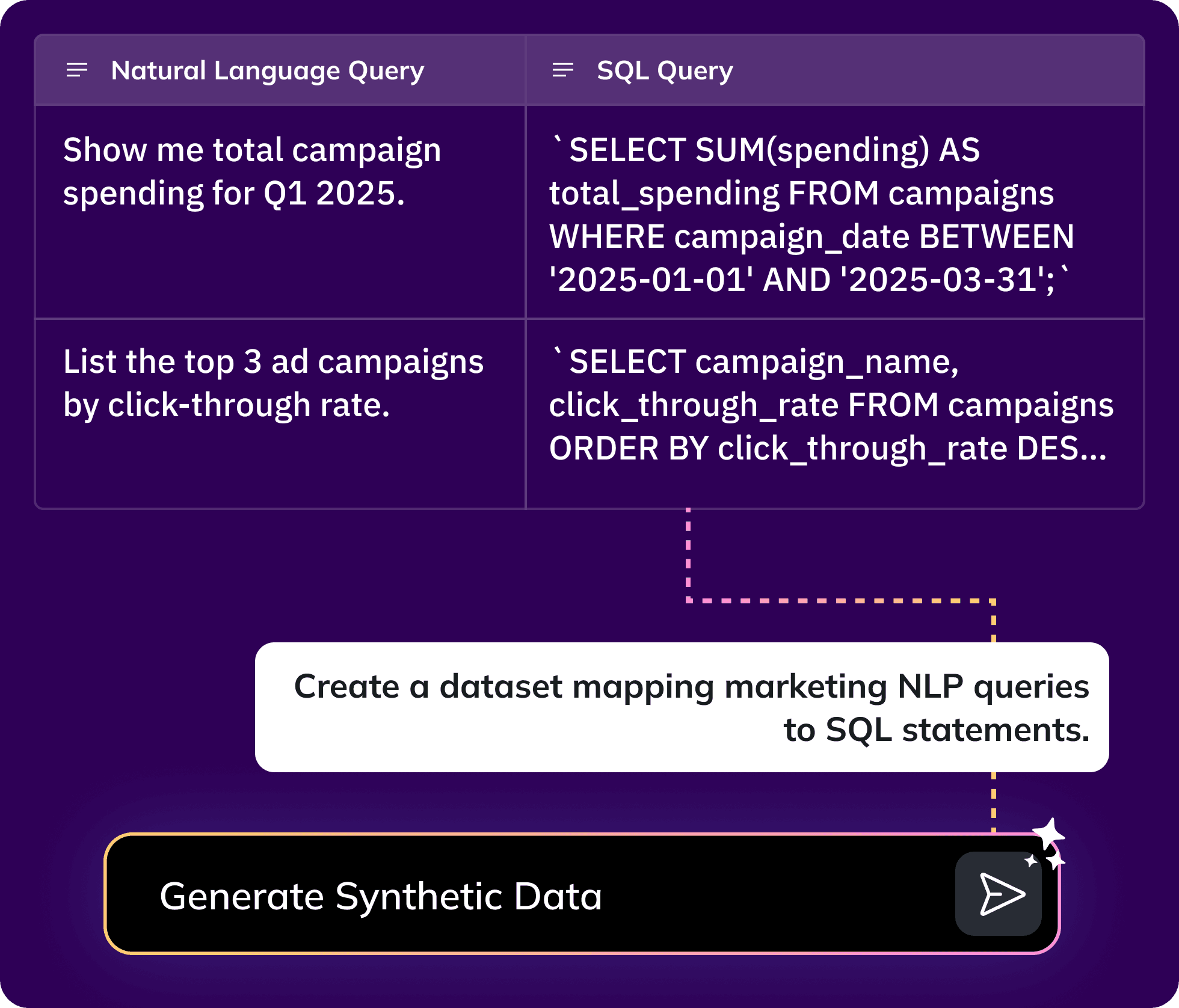

Generate and manage diverse synthetic datasets to effectively train and test AI models, including edge cases.

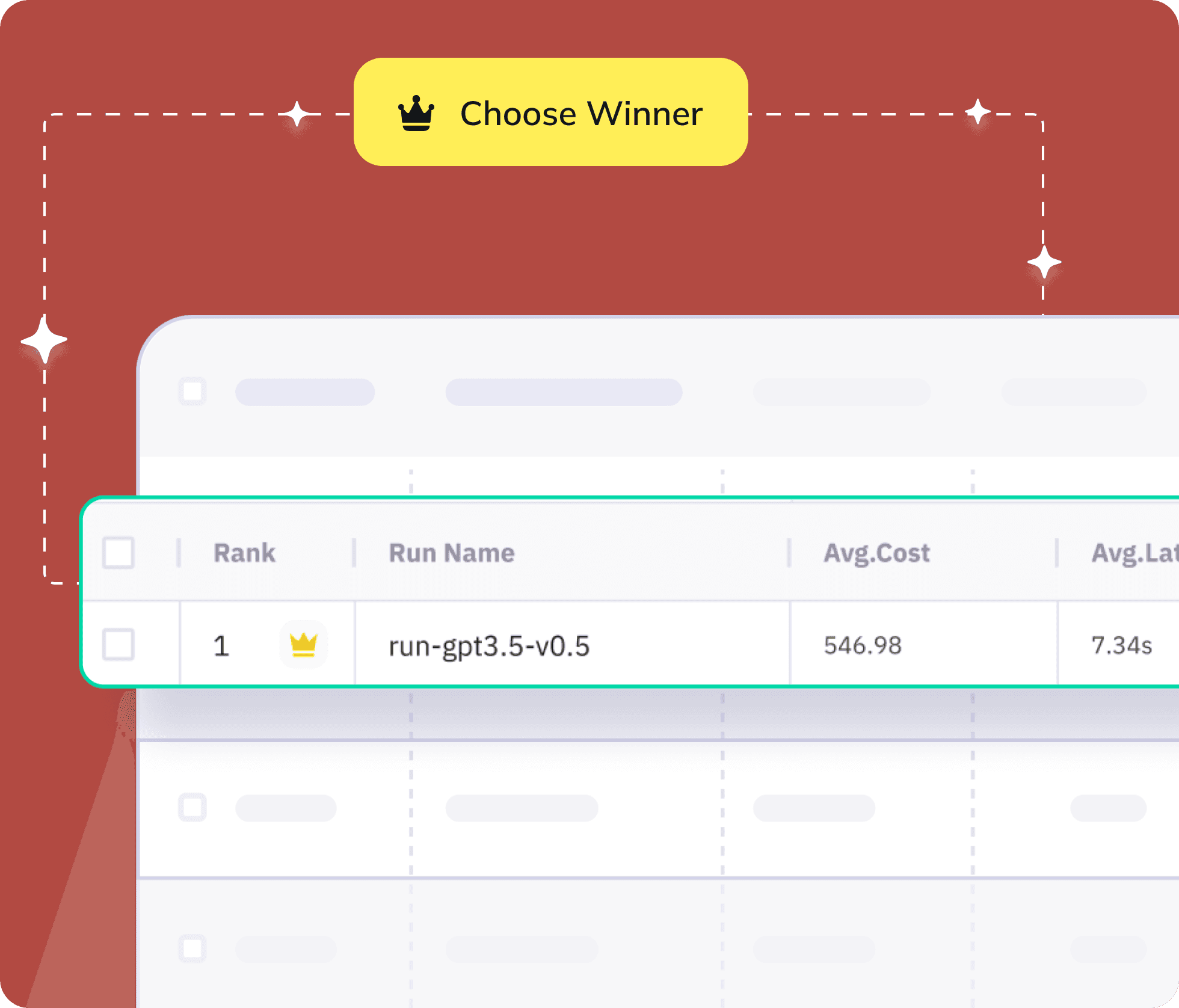

Test, compare, and analyse multiple agentic workflow configurations to identify the ‘Winner’ based on built-in or custom evaluation metrics, with no code required.
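The platform runs this comparison without code. For readers who want to see the underlying idea, here is a minimal sketch in plain Python, assuming a toy exact-match metric and two stubbed configurations. The names `pick_winner`, `exact_match`, `config_a`, and `config_b` are hypothetical and are not part of Future AGI's API.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical test cases: (input, expected answer).
TEST_CASES: List[Tuple[str, str]] = [
    ("2+2", "4"),
    ("capital of France", "Paris"),
    ("3*3", "9"),
]


def exact_match(output: str, expected: str) -> float:
    """Toy custom metric: 1.0 if the normalized strings match, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


# Stubbed workflow configurations standing in for real agent runs.
_ANSWERS_A = {"2+2": "4", "capital of France": "Paris", "3*3": "9"}
_ANSWERS_B = {"2+2": "4", "capital of France": "paris ", "3*3": "6"}


def config_a(question: str) -> str:
    return _ANSWERS_A[question]


def config_b(question: str) -> str:
    return _ANSWERS_B[question]


def pick_winner(
    configs: Dict[str, Callable[[str], str]],
    cases: List[Tuple[str, str]],
    metric: Callable[[str, str], float],
) -> Tuple[str, Dict[str, float]]:
    """Score every configuration on every case and return the best by mean score."""
    scores = {
        name: mean(metric(run(question), expected) for question, expected in cases)
        for name, run in configs.items()
    }
    winner = max(scores, key=scores.get)
    return winner, scores


winner, scores = pick_winner(
    {"config_a": config_a, "config_b": config_b}, TEST_CASES, exact_match
)
print(winner, scores)
```

Swapping `exact_match` for another scoring function changes what "winning" means without touching the comparison loop, which is the same separation between metric and configuration described above.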

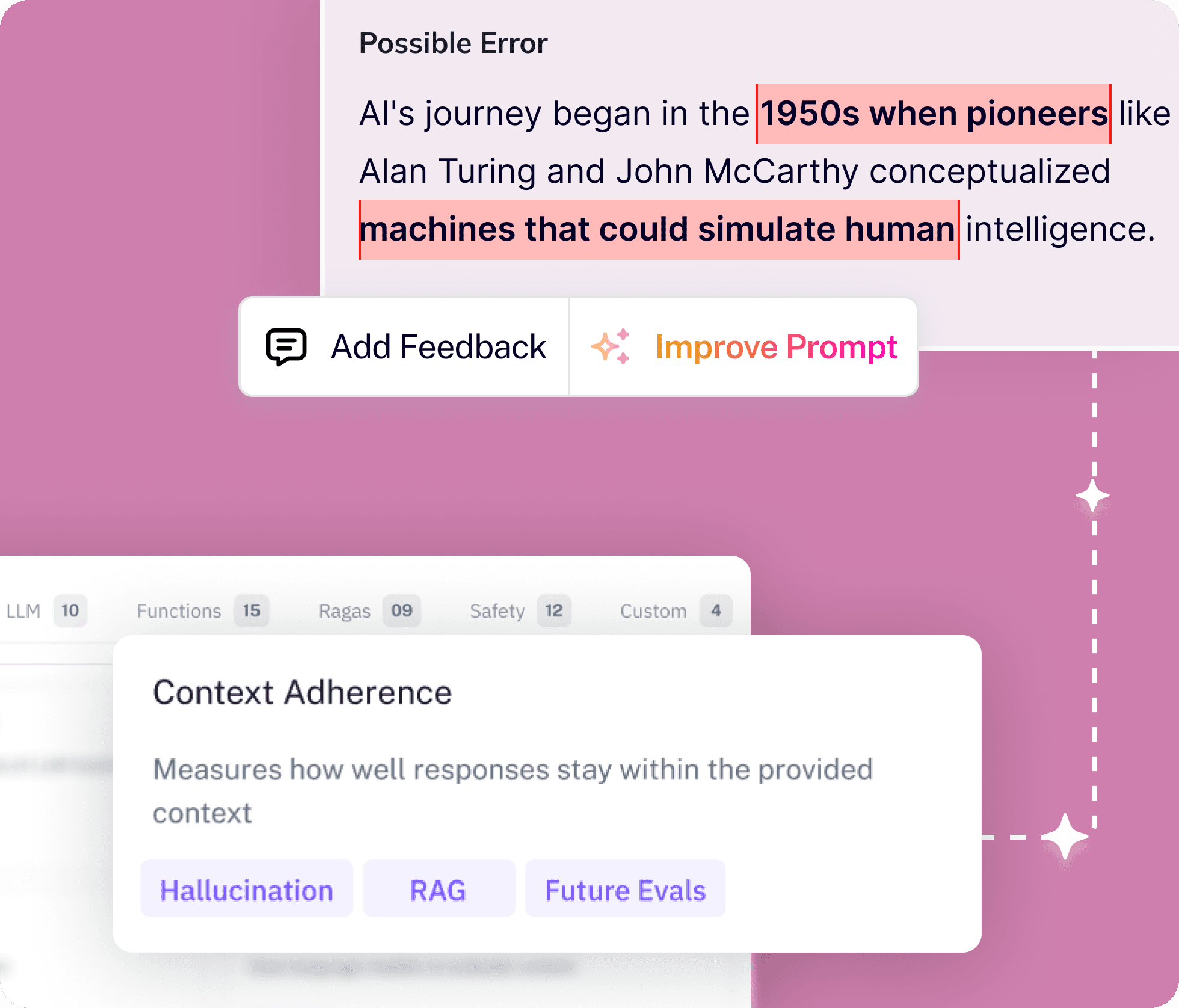

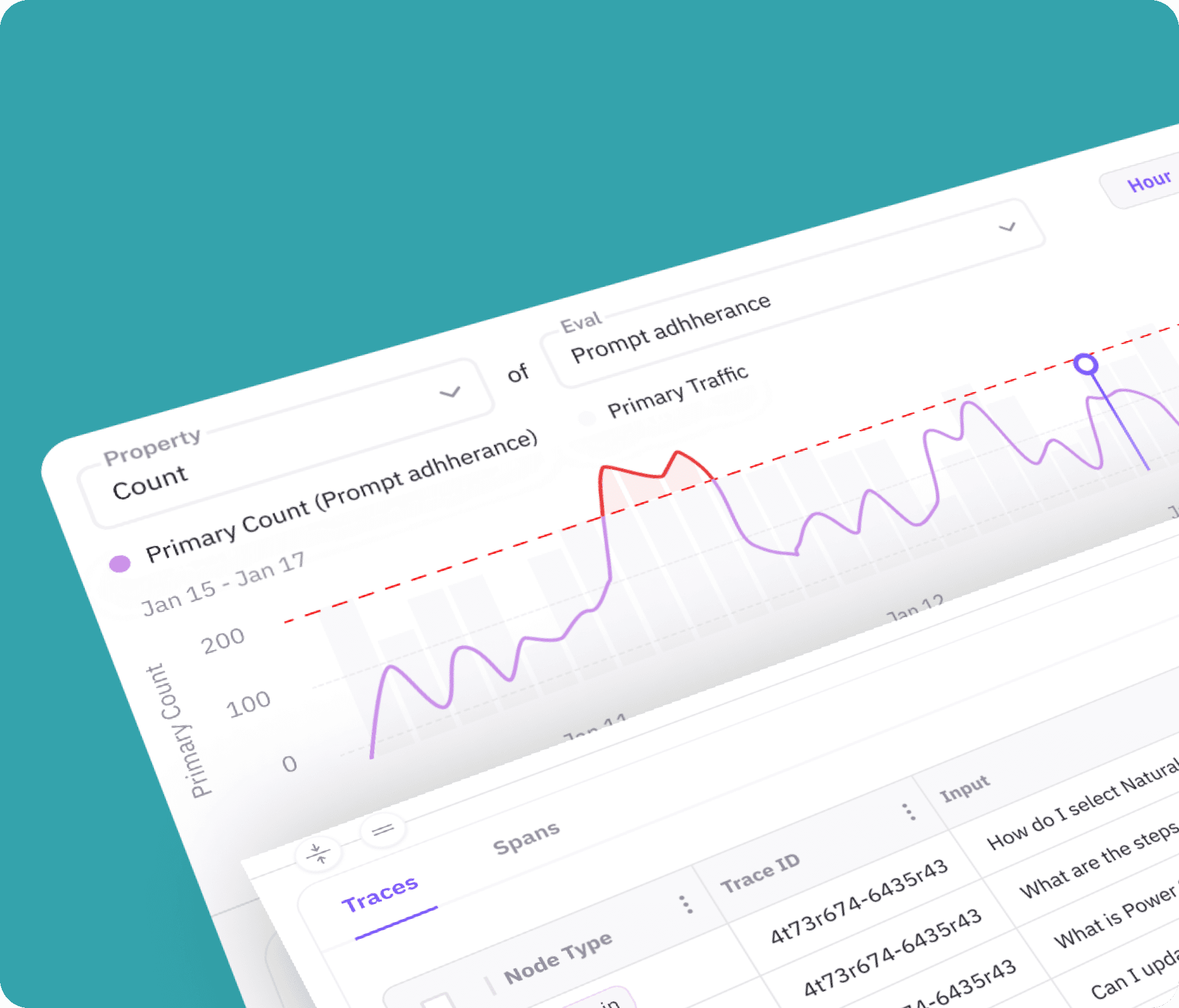

Assess and measure agent performance, pinpoint root causes, and close the loop with actionable feedback using our proprietary eval metrics.

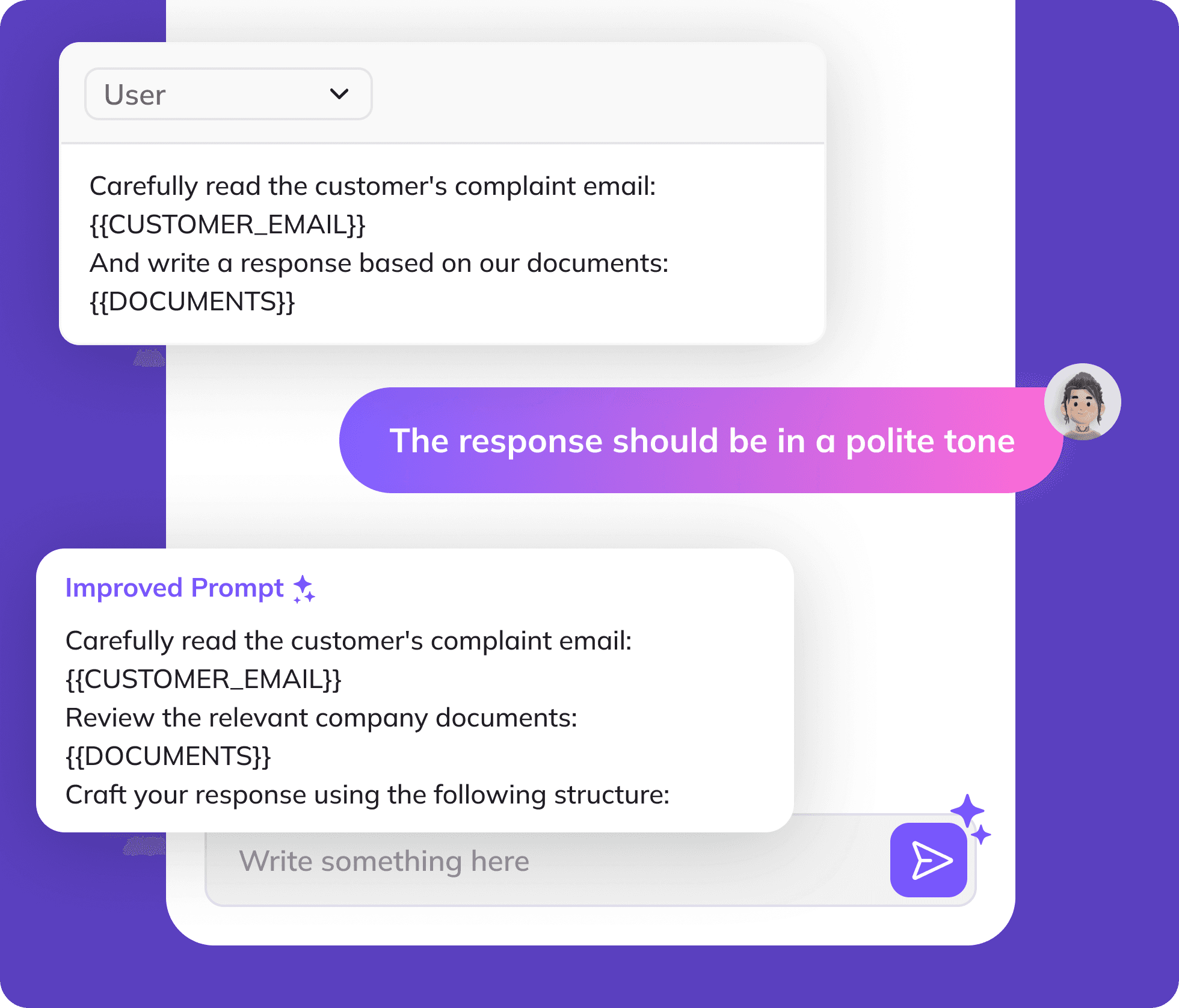

Enhance your LLM application's performance by incorporating feedback from evaluations or custom input, and let the system automatically refine your prompt based on that feedback.

Track applications in production with real-time insights, diagnose issues, and improve robustness, while gaining priority access to Future AGI's safety metrics to block unsafe content with minimal latency.

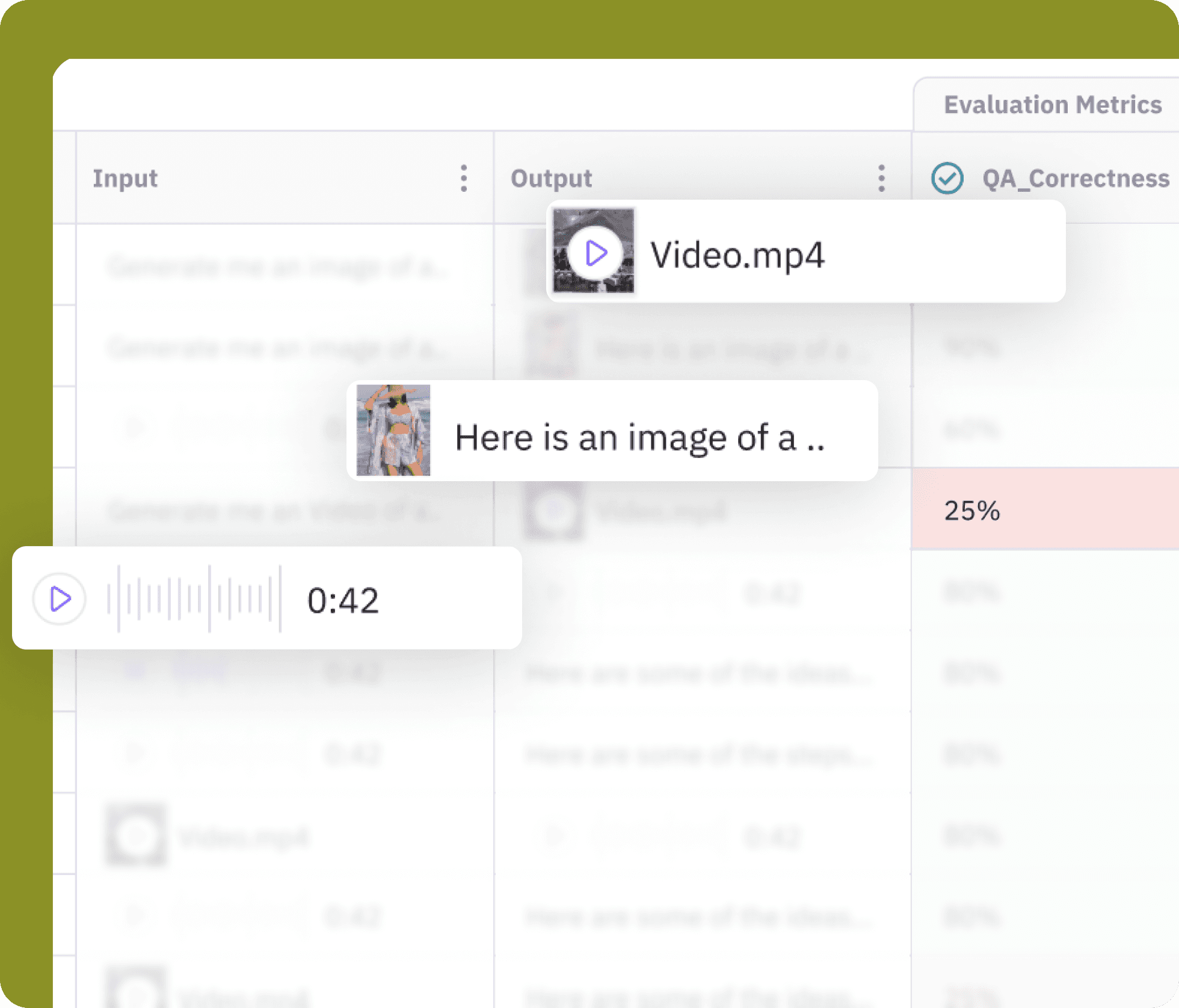

Evaluate your AI across different modalities: text, image, audio, and video. Pinpoint errors and automatically receive feedback to fix them.

Integrate into your Existing Workflow

Future AGI is developer-first and integrates seamlessly with industry-standard tools, so your team can keep their workflow unchanged.

```python
from fi.integrations.otel import OpenAIInstrumentor, register
from fi.integrations.otel.types import (
    EvalName,
    EvalSpanKind,
    EvalTag,
    EvalTagType,
    prepare_eval_tags,
)
from openai import OpenAI

# Define custom evaluation tags to run against traced LLM spans
eval_tags = [
    EvalTag(
        eval_name=EvalName.DETERMINISTIC_EVALS,
        value=EvalSpanKind.LLM,
        type=EvalTagType.OBSERVATION_SPAN,
        config={
            "multi_choice": False,
            "choices": ["Yes", "No"],
            "rule_prompt": "Evaluate if the response is correct",
        },
    )
]

# Configure trace provider with custom evaluation tags
trace_provider = register(
    endpoint="https://app.futureagi.com//tracer/observation-span/create_otel_span/",
    eval_tags=prepare_eval_tags(eval_tags),
    project_name="ANTHROPIC_TEST",
    project_version_name="v1",
)

# Initialize the OpenAI instrumentation so client calls are traced
OpenAIInstrumentor().instrument(tracer_provider=trace_provider)

client = OpenAI()

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "developer", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Write a haiku about recursion in programming."},
    ],
)

print(completion.choices[0].message)
```